We focus on building a distributed Multi-Camera Tracking system for a camera network, with pedestrians as our primary targets. Multi-Camera Tracking is a critical underlying technology for large-scale intelligent surveillance systems. Building such complex systems requires solving some of the hardest tasks in computer vision, including detection, single-camera tracking (which covers visual object tracking and multi-object tracking within one view), inter-camera tracking, and re-identification.

Related projects

Related papers

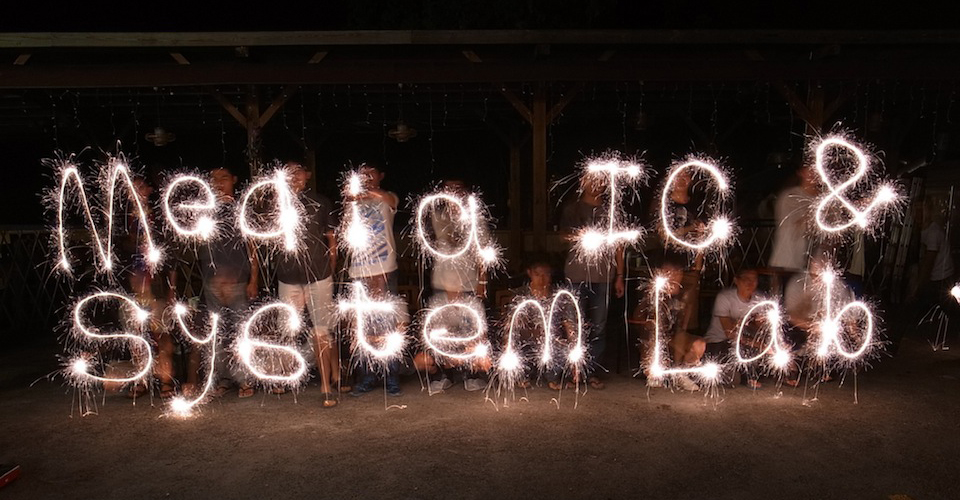

Estimating the six-degree-of-freedom (6DoF) pose of a planar object from images is an important problem with numerous applications ranging from robotics to augmented reality. While state-of-the-art Perspective-n-Point (PnP) algorithms perform well in pose estimation, their success hinges on whether feature points can be correctly extracted and matched, which requires target objects with rich texture. In this work, we propose a robust two-step direct method for 6DoF pose estimation that performs accurately on both textured and textureless planar targets. First, the pose of a planar target with respect to a calibrated camera is approximately estimated by casting the problem as template matching. Second, each estimated pose is refined and disambiguated using a dense alignment scheme. Extensive experiments on synthetic and real datasets demonstrate that the proposed direct pose estimation algorithm performs favorably against state-of-the-art feature-based approaches in robustness and accuracy under varying conditions. Furthermore, we show that the proposed dense alignment scheme can also be used for accurate pose tracking in video sequences.
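The planar geometry behind the first step can be made concrete. For a target lying on the plane Z = 0 of its own frame, a pose (R, t) and camera intrinsics K induce the homography H = K [r1 r2 t], so each candidate pose in a template-matching search corresponds to one warp of the template image. The Python sketch below only illustrates this standard pose-to-homography mapping; the helper names (`homography_from_pose`, `project_planar_point`, etc.) are hypothetical, and the pose sampling, matching score, and dense alignment actually used in DPE are not reproduced here.

```python
import math

def matmul(A, B):
    """Multiply two matrices given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def rotation_z(theta):
    """Rotation by `theta` radians about the camera's optical (z) axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]

def homography_from_pose(K, R, t):
    """H = K [r1 r2 t] for a target lying on the plane Z = 0 of its own frame."""
    # The third rotation column r3 drops out because Z = 0 for every target point.
    M = [[R[i][0], R[i][1], t[i]] for i in range(3)]
    return matmul(K, M)

def project_planar_point(H, X, Y):
    """Map target-plane coordinates (X, Y) to pixel coordinates (u, v)."""
    u, v, w = (H[i][0] * X + H[i][1] * Y + H[i][2] for i in range(3))
    return u / w, v / w

# Usage: a fronto-parallel target 2 units in front of an assumed camera.
K = [[500.0, 0.0, 320.0], [0.0, 500.0, 240.0], [0.0, 0.0, 1.0]]
H = homography_from_pose(K, rotation_z(0.0), [0.0, 0.0, 2.0])
print(project_planar_point(H, 0.1, 0.2))  # (345.0, 290.0)
```

Projecting through H gives the same pixels as transforming the 3D point (X, Y, 0) by (R, t) and applying K, which is why a planar template can be compared against the image under each hypothesized pose without a full 3D model.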

See more about DPE

We propose a system for real-time six-degree-of-freedom (6DoF) tracking of a passive stylus that achieves submillimeter accuracy, making it suitable for writing or drawing in mixed reality applications. Our system is particularly easy to implement, requiring only a monocular camera, a 3D-printed dodecahedron, and hand-glued binary square markers. Its accuracy and performance come from model-based tracking with a calibrated model and a combination of sparse pose estimation and dense alignment. We evaluate the system's speed and accuracy on a number of synthetic and real datasets, showing that it can be competitive with state-of-the-art multi-camera motion capture systems. We also demonstrate several applications of the technology, ranging from 2D and 3D drawing in VR to general object manipulation and board games.

See more about DodecaPen

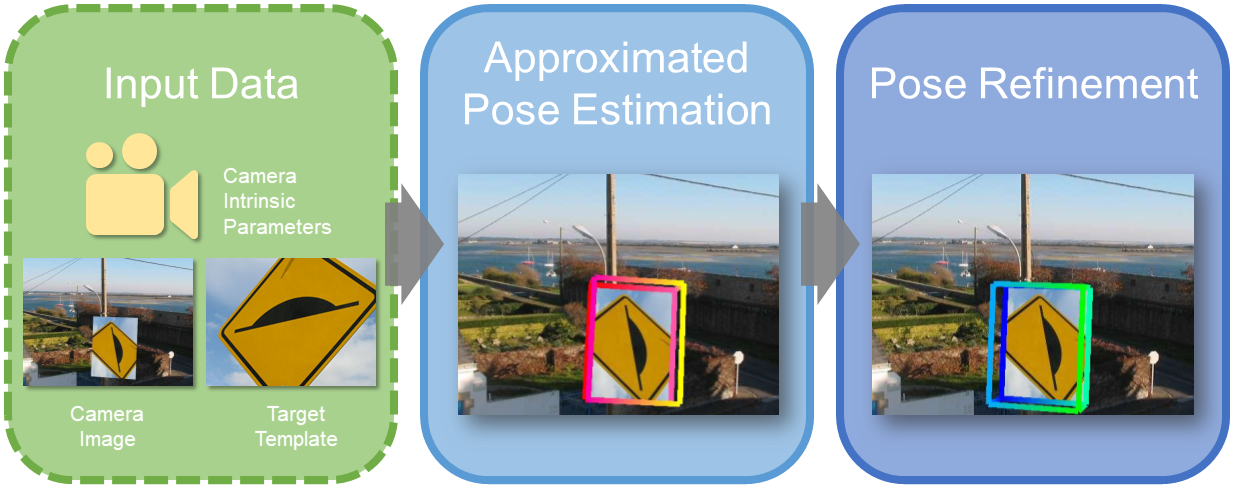

Accurately tracking the six-degree-of-freedom pose of an object in real scenes is an important task in computer vision and augmented reality, with numerous applications. Although a variety of algorithms for this task have been proposed, existing methods in the literature are difficult to compare, because different sequences are often used for evaluation and no large benchmark dataset close to real-world scenarios is available. In this paper, we present a large object pose tracking benchmark dataset consisting of RGB-D video sequences of 2D and 3D targets with ground-truth information. The videos are recorded with a programmable robotic arm under various lighting conditions and with different motion patterns and speeds. We present extensive quantitative evaluation results of state-of-the-art methods on this benchmark dataset and discuss potential research directions in this field.
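Evaluating trackers against ground-truth poses requires a per-frame pose error. One widely used choice is the average distance between the object's model points transformed by the estimated pose and by the ground-truth pose, with a frame counted as a success when that distance is below a threshold. The Python sketch below implements this generic measure; it is an assumption for illustration, and the exact metric, threshold, and aggregation used in the OPT benchmark may differ. All function names are hypothetical.

```python
import math

def transform(R, t, p):
    """Apply the rigid transform x -> R x + t to a 3D point p."""
    return tuple(sum(R[i][k] * p[k] for k in range(3)) + t[i] for i in range(3))

def average_distance(model_points, R_est, t_est, R_gt, t_gt):
    """Mean Euclidean distance between model points under the estimated and ground-truth poses."""
    total = 0.0
    for p in model_points:
        total += math.dist(transform(R_est, t_est, p), transform(R_gt, t_gt, p))
    return total / len(model_points)

def success_rate(errors, threshold):
    """Fraction of frames whose pose error falls below `threshold`."""
    return sum(e < threshold for e in errors) / len(errors)

# Usage: an estimate that is off by 1 cm along the optical axis.
I = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
points = [(0.0, 0.0, 0.0), (0.1, 0.0, 0.0), (0.0, 0.1, 0.0)]
err = average_distance(points, I, (0.0, 0.0, 1.01), I, (0.0, 0.0, 1.0))
print(round(err, 6))
```

A common convention sets the threshold relative to the object's size (for example a fraction of its diameter), so that errors on small and large targets are judged on a comparable scale; sweeping the threshold and plotting the success rate gives a curve that summarizes tracker accuracy.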

See more about OPT Dataset

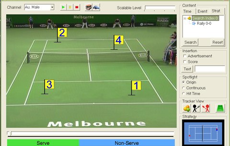

Tennis Real Play (TRP) is an interactive tennis game system built from models extracted from real game videos. The key techniques proposed for TRP are player modeling and video-based player/court rendering. For player model building, methods for database normalization and a transition model of tennis players are proposed. For player/court rendering, methods for clip selection, smooth transitions between connected clips, and a framework combining 3D models with video-based rendering are proposed. Experiments show that vivid rendering results can be generated with low computational cost. Moreover, the player model faithfully captures the ability and condition of a player and can be used to roughly predict the results of real tennis games. In the user study of TRP, subjects agreed that playing TRP increases interaction and provides an immersive and enjoyable experience.

See more about Tennis Real Play

Research on sports videos is interesting and challenging due to the growing number of game videos and the demand for more diverse ways of viewing them. This paper proposes a new method for presenting sports videos, using tennis as an example in a viewing program called Tennis Video 2.0. Through structure analysis, content extraction, and enriched video rendering, the presentation of sports videos gains three properties: Structure, Interactivity, and Scalability. Structure allows people to browse game videos and watch highlights on demand; in addition, the proposed strategy search offers a convenient way to find favorite hit patterns. Interactivity lets people watch enriched game video rendered in real time, which can make watching games more enjoyable. Scalability makes the video scalable in the semantic domain: four different levels of video content are transmitted to accommodate different bandwidth limitations. In conclusion, the proposed sports video viewer allows people to watch games in ways that were not previously possible.

See more about Tennis Video 2.0

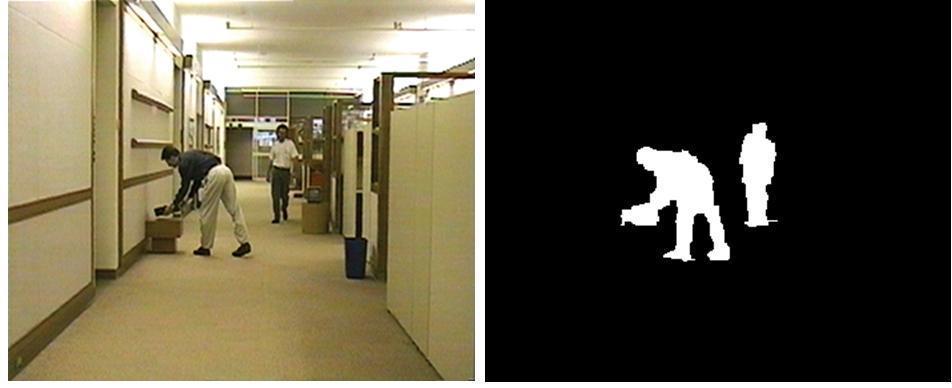

Segmentation, tracking, and description extraction are important operations in smart camera surveillance systems. We introduce a robust tracking algorithm based on segmentation and descriptors. Segmentation is applied first, and a description of each connected component is then extracted for object classification to generate the video object masks. The algorithm thus performs segmentation, tracking, and description extraction within a single procedure, without redundant computation. In addition, we propose a new descriptor for human objects, the Human Color Structure Descriptor (HCSD), for use in this algorithm. Experimental results show that the proposed algorithm provides precise video object masks and trajectories, and that HCSD outperforms the MPEG-7 Scalable Color Descriptor and Color Structure Descriptor for human objects.
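The pipeline above, segment first and then describe each connected component, can be sketched generically. The Python code below labels 4-connected regions of a binary foreground mask and computes a normalized RGB histogram per region, plus a histogram-intersection score that could be used to match regions across frames. This is only a stand-in under stated assumptions: it is not HCSD or any MPEG-7 descriptor, whose definitions are not given here, and all names are hypothetical.

```python
from collections import deque

def label_components(mask):
    """Label 4-connected foreground regions of a binary mask (list of rows of 0/1)."""
    h, w = len(mask), len(mask[0])
    labels = [[0] * w for _ in range(h)]
    count = 0
    for y in range(h):
        for x in range(w):
            if mask[y][x] and labels[y][x] == 0:
                count += 1  # start a new region and flood-fill it breadth-first
                labels[y][x] = count
                queue = deque([(y, x)])
                while queue:
                    cy, cx = queue.popleft()
                    for ny, nx in ((cy - 1, cx), (cy + 1, cx), (cy, cx - 1), (cy, cx + 1)):
                        if 0 <= ny < h and 0 <= nx < w and mask[ny][nx] and labels[ny][nx] == 0:
                            labels[ny][nx] = count
                            queue.append((ny, nx))
    return labels, count

def color_histogram(image, labels, label, bins=4):
    """Normalized joint RGB histogram (bins**3 cells) over pixels carrying `label`; 8-bit channels."""
    hist = [0.0] * bins ** 3
    n = 0
    for y, row in enumerate(labels):
        for x, lab in enumerate(row):
            if lab == label:
                r, g, b = (c * bins // 256 for c in image[y][x])  # quantize each channel
                hist[(r * bins + g) * bins + b] += 1.0
                n += 1
    return [v / n for v in hist] if n else hist

def histogram_intersection(h1, h2):
    """Similarity in [0, 1] between two normalized histograms."""
    return sum(min(a, b) for a, b in zip(h1, h2))
```

In a tracker, each region in the current frame could be associated with the previous-frame region whose histogram gives the highest intersection score; a descriptor tailored to human objects, such as the proposed HCSD, would replace the plain histogram in that step.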

See more about Surveillance System