Direct Pose Estimation for Planar Objects

Abstract

Estimating the six-degrees-of-freedom pose of a planar object from images is an important problem with numerous applications ranging from robotics to augmented reality. While the state-of-the-art Perspective-n-Point algorithms perform well in pose estimation, their success hinges on whether feature points can be extracted and matched correctly on target objects with rich texture. In this work, we propose a two-step robust direct method for six-dimensional pose estimation that performs accurately on both textured and textureless planar target objects. First, the pose of a planar target object with respect to a calibrated camera is approximately estimated by posing it as a template matching problem. Second, each object pose is refined and disambiguated using a dense alignment scheme. Extensive experiments on both synthetic and real datasets demonstrate that the proposed direct pose estimation algorithm performs favorably against state-of-the-art feature-based approaches in terms of robustness and accuracy under varying conditions. Furthermore, we show that the proposed dense alignment scheme can also be used for accurate pose tracking in video sequences.

Paper

Paper (20.7 MB)

Citation

Po-Chen Wu, Hung-Yu Tseng, Ming-Hsuan Yang, and Shao-Yi Chien, "Direct Pose Estimation for Planar Objects." Computer Vision and Image Understanding, 2018.

Bibtex

@article{DPE2018,
  author = {Wu, Po-Chen and Tseng, Hung-Yu and Yang, Ming-Hsuan and Chien, Shao-Yi},
  title = {Direct Pose Estimation for Planar Objects},
  journal = {Computer Vision and Image Understanding},
  year = {2018}
}

Download

Code

Datasets

| Synthetic Image Dataset | Resolution: 800 × 600 |
|---|---|
| Focal Length fx | 1000.0 |
| Focal Length fy | 1000.0 |
| Principal Point cx | 400.5* |
| Principal Point cy | 300.5* |
| Templates | |
| Datasets | |
| Poses | |
| README | |

Notes

- You can check the file size by moving your mouse over the corresponding download icon.
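As a rough illustration of how the intrinsics listed in the dataset table can be used, the sketch below projects a point on a planar target into the image with a standard pinhole model. The pose, the sample point, and the helper names `transform` and `project` are hypothetical and chosen only for this example. The pixel-coordinate convention behind the starred principal point values is not stated on this page, so treating them as plain pinhole offsets is an assumption.

```python
# Intrinsics copied from the dataset table (800 x 600 images).
FX, FY = 1000.0, 1000.0
CX, CY = 400.5, 300.5  # assumption: used directly as pinhole offsets


def transform(rotation, translation, point_obj):
    """Apply a rigid pose (3x3 row-major rotation, 3-vector translation)."""
    return tuple(
        sum(rotation[i][j] * point_obj[j] for j in range(3)) + translation[i]
        for i in range(3)
    )


def project(point_cam):
    """Project a 3D point given in camera coordinates onto the image."""
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must lie in front of the camera")
    return (FX * x / z + CX, FY * y / z + CY)


# Hypothetical pose: target plane parallel to the image, 2 units away.
R = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
t = [0.0, 0.0, 2.0]
corner = (0.1, -0.1, 0.0)  # a point on the target plane z = 0

u, v = project(transform(R, t, corner))
print(u, v)  # approximately (450.5, 250.5)
```

Because every target point lies on the plane z = 0, only the first two columns of the rotation and the translation affect the projection, which is why planar pose estimation is often expressed through a homography.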

Results

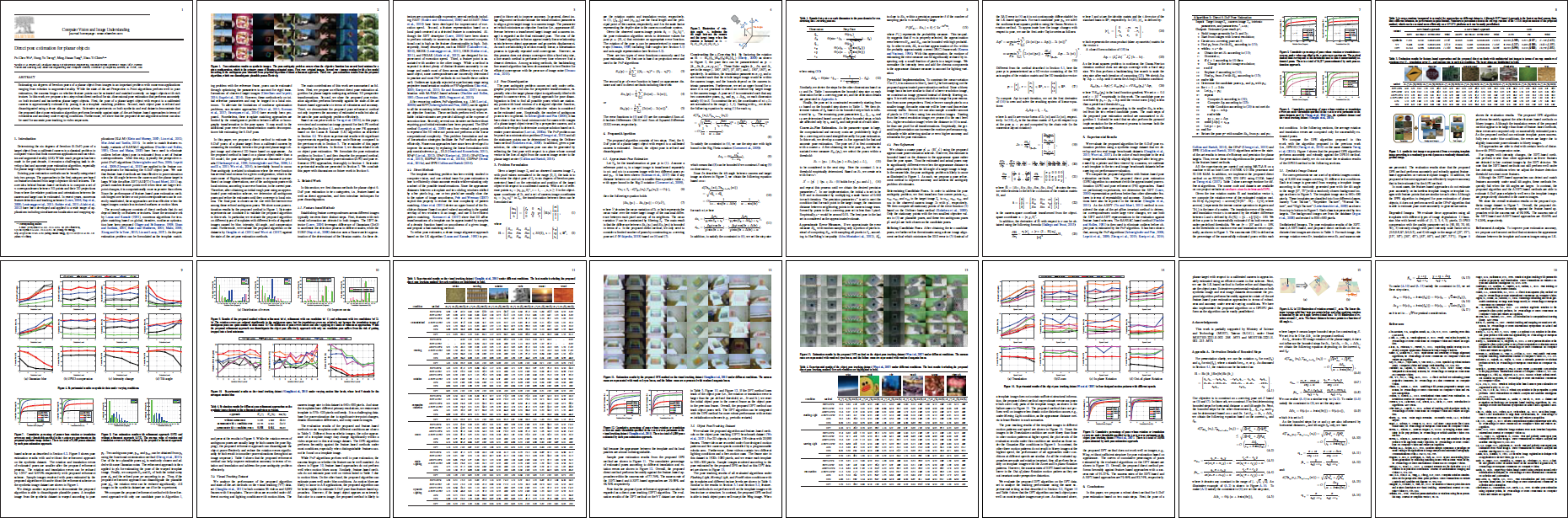

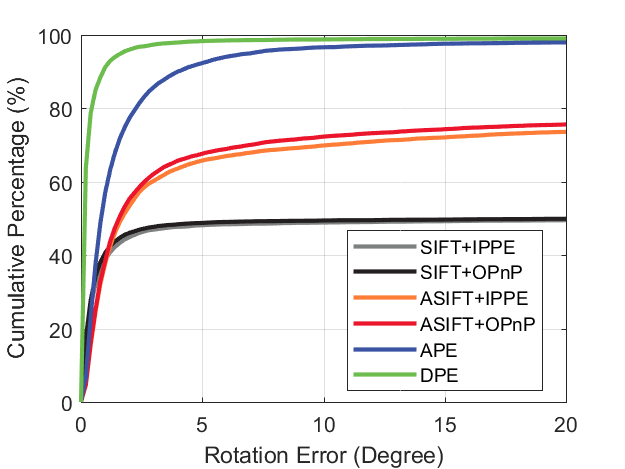

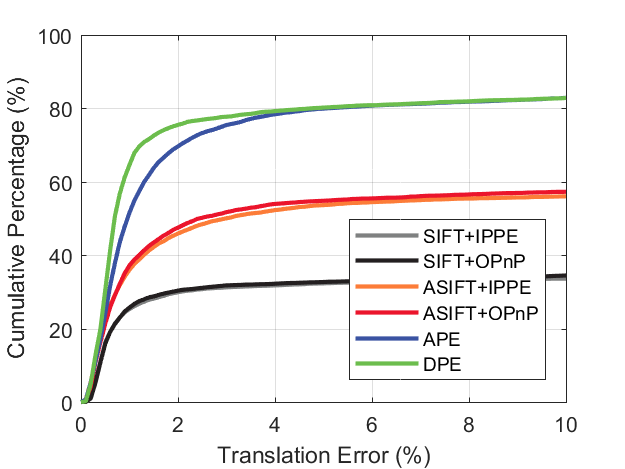

• Synthetic Image Dataset

Cumulative percentage of poses whose rotation or translation errors fall below the thresholds given on the x-axis, for experiments on the proposed synthetic image dataset. Each pose estimation approach estimates a total of 8,400 poses.
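A curve of this kind can be computed from a list of per-pose errors as in the minimal sketch below. The function name `cumulative_percentage` and the sample values are made up for illustration, and counting errors strictly below each threshold (rather than at or below it) is an assumption.

```python
def cumulative_percentage(errors, thresholds):
    """For each threshold, return the percentage of errors strictly below it."""
    total = len(errors)
    if total == 0:
        raise ValueError("errors must not be empty")
    return [100.0 * sum(e < th for e in errors) / total for th in thresholds]


# Made-up rotation errors in degrees, not taken from the datasets.
rotation_errors_deg = [0.5, 1.2, 3.0, 7.5, 40.0]
thresholds_deg = [1.0, 5.0, 10.0, 20.0]

curve = cumulative_percentage(rotation_errors_deg, thresholds_deg)
print(curve)  # [20.0, 60.0, 80.0, 80.0]
```

A curve that rises quickly and saturates near 100% indicates a method that is both accurate and robust; a curve that plateaus below 100% reveals a fraction of gross failures that no reasonable threshold absorbs.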

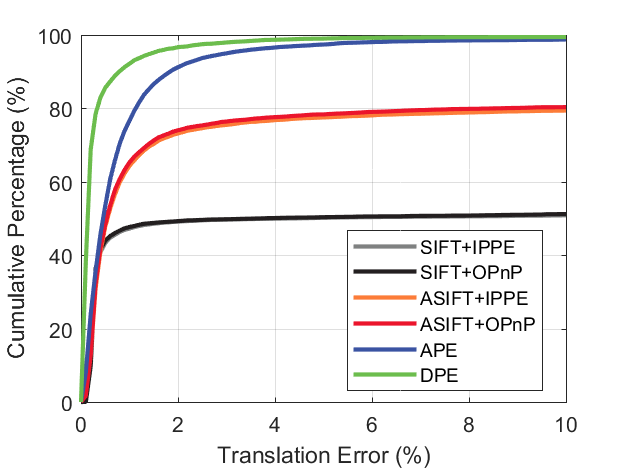

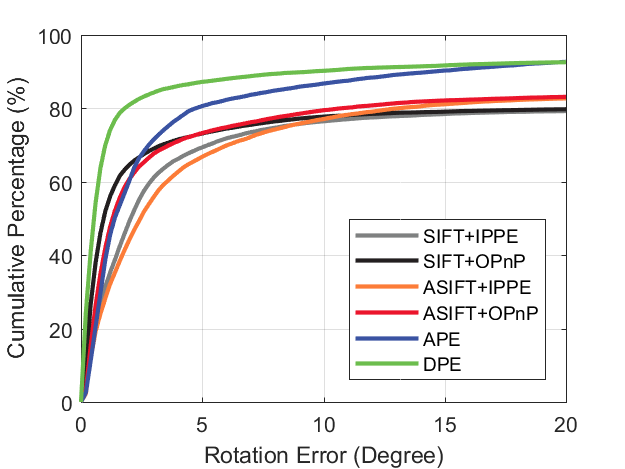

• Visual Tracking Dataset

Cumulative percentage of poses whose rotation or translation errors fall below the thresholds given on the x-axis, for experiments on the visual tracking dataset. Each pose estimation approach estimates a total of 6,889 poses.

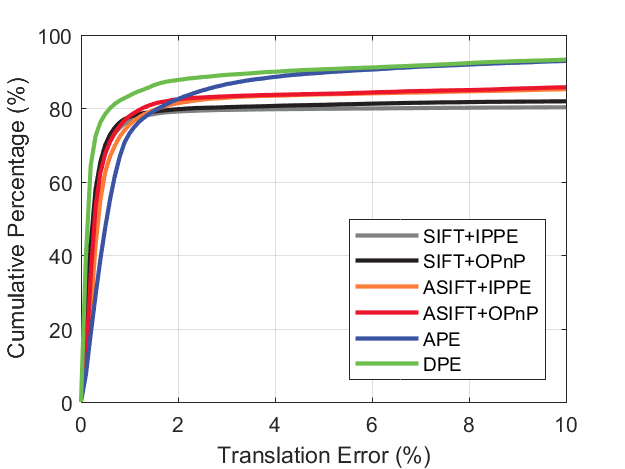

• Object Pose Tracking Dataset

Cumulative percentage of poses whose rotation or translation errors fall below the thresholds given on the x-axis, for experiments on the object pose tracking dataset. Each pose estimation approach estimates a total of 20,988 poses.
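This page does not define the rotation and translation errors used in the plots. The sketch below shows one common choice, stated here as an assumption rather than the paper's definition: the rotation error is the angle of the relative rotation between estimate and ground truth, and the translation error is the distance between the two translations relative to the ground-truth norm. The function names are hypothetical.

```python
import math


def rotation_error_deg(r_est, r_gt):
    """Angle in degrees of the relative rotation r_est^T r_gt (3x3 lists)."""
    # trace(r_est^T r_gt) equals the sum of element-wise products.
    trace = sum(r_est[i][j] * r_gt[i][j] for i in range(3) for j in range(3))
    # Clamp to guard against round-off pushing the cosine outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, (trace - 1.0) / 2.0))
    return math.degrees(math.acos(cos_angle))


def translation_error_pct(t_est, t_gt):
    """Translation difference as a percentage of the ground-truth norm."""
    diff = math.sqrt(sum((a - b) ** 2 for a, b in zip(t_est, t_gt)))
    norm = math.sqrt(sum(b * b for b in t_gt))
    if norm == 0.0:
        raise ValueError("ground-truth translation must be non-zero")
    return 100.0 * diff / norm


# Made-up example: a 90-degree rotation about the z-axis versus identity.
identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
rot_z_90 = [[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]

print(rotation_error_deg(rot_z_90, identity))
print(translation_error_pct([0.0, 0.0, 1.1], [0.0, 0.0, 1.0]))
```

Normalizing the translation error by the ground-truth distance keeps results comparable across sequences in which the target sits at very different depths.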

• Textureless Planar Object Pose Tracking

References

- Gauglitz, Steffen, et al., "Evaluation of interest point detectors and feature descriptors for visual tracking." IJCV, 2011.

- Wu, Po-Chen, et al., "A Benchmark Dataset for 6DoF Object Pose Tracking." ISMAR Adjunct, 2017.

- Lowe, David G., "Distinctive image features from scale-invariant keypoints." IJCV, 2004.

- Yu, Guoshen, and Jean-Michel Morel, "ASIFT: An algorithm for fully affine invariant comparison." IPOL, 2011.

- Zheng, Yinqiang, et al., "Revisiting the PnP problem: A fast, general and optimal solution." ICCV, 2013.

- Collins, Toby, and Adrien Bartoli, "Infinitesimal plane-based pose estimation." IJCV, 2014.

- Tseng, Hung-Yu, et al., "Direct 3D pose estimation of a planar target." WACV, 2016.